As Digital Scholarship Program Manager for Harvard Library (and, formerly, as Head of Emerging Technologies for the University of Oklahoma Libraries), I explore, develop, and deploy technology for research and instructional purposes.

Below are a few examples, and here is a CV link detailing work projects, professional service, and associated talks and publications. Please don't hesitate to reach out via the Personal page to collaborate.

Deployed in virtual and augmented reality, 3D models let researchers and students remotely experience diverse scholarly materials first-hand, though this content seldom finds its way into institutional repositories or peer-reviewed literature, where it could be reused and cited. Currently, these methods are dispersed, and no single discipline, institution, or practitioner has yet documented a truly citable 3D curation method.

The IMLS-funded 3D Research Data Curation Framework (3DFrame) grant is our attempt to conceptually unite interrelated - but administratively disparate - 3D data production, (immersive) analytics, and preservation methods, which combine to connect a range of computational processes. Our goal: ensure the scholarly rigor of 3D contents, thereby preserving these materials as credible (i.e., FAIR) primary sources for downstream citation by researchers across disciplines. Here’s 3DF so far…

Professor Zack and I have been working on the issue of scholarly 3D/VR for about a decade, since the release of the Oculus DK1. Mainly, we've focused on getting VR out of the lab and into the classroom, specifically by providing practical guidance and publishing on its instructional benefits. Increasingly, the bottleneck has been content, not a lack of interest, and even where content exists, its academic rigor remains an open question.

Accessible scanning techniques like photogrammetry have partially solved the content problem, but the scholarly value of their outputs isn't easily measured. To address the question of curation, we've dedicated part of 3DF to studying the state of the art in 3D production across academic and cultural heritage institutions, and another part (specifically, research question 4) to understanding the potential impact of quality control methods for 3D content in immersive viewing environments.

Everyone is doing 3D scanning and viewing a little differently, depending on their training, discipline, budget, and institutional mission, and no one is quite sure what constitutes a “good” model at the end of the day. So, we must first understand what's currently being done, and why…

At the core of 3DF is travel. The grant narrative specifies a range of 3D scanning lab types, where the research team will observe, interview, and test current and future (XR-enhanced) workflows. Insofar as we are most interested in quirks and idiosyncrasies, you can think of this approach as a sort of ethnography. That is, we seek the sort of insider knowledge that disproportionately informs immature data types like 3D. Science and Technology Studies (STS), whose researchers have studied knowledge creation in weather centers and on research vessels, is a useful reference.

The Irschick Lab and its DigitalLife3D project was our first stop in our mission to document the messy, true story of 3D data production. There, at UMass in the spring of 2024, we were given a behind-the-scenes look at both the methods and challenges associated with live animal scanning. Then, in February, we spent a week in Utah, observing contrasting end-user communities representing public libraries (SLCPL) and flagship universities (UofUtah). Next will be sites geared toward tomographic (e.g., MicroCT) capture, large-scale plant science, and, at one of our home bases in Cambridge, MA, photogrammetric cultural heritage preservation.

By the end of the grant period (2026), we anticipate visiting upwards of 10 distinct institutions, ranging from public universities, to the Ivy League, to cultural heritage institutions (e.g., museums). This diversity of research data will let us triangulate and then publish on the state and trajectory of 3D data curation, before extrapolating scalable methods that might help future practitioners, whatever their discipline or institution type. Immersive viewing is central to our forward-looking deliverables…

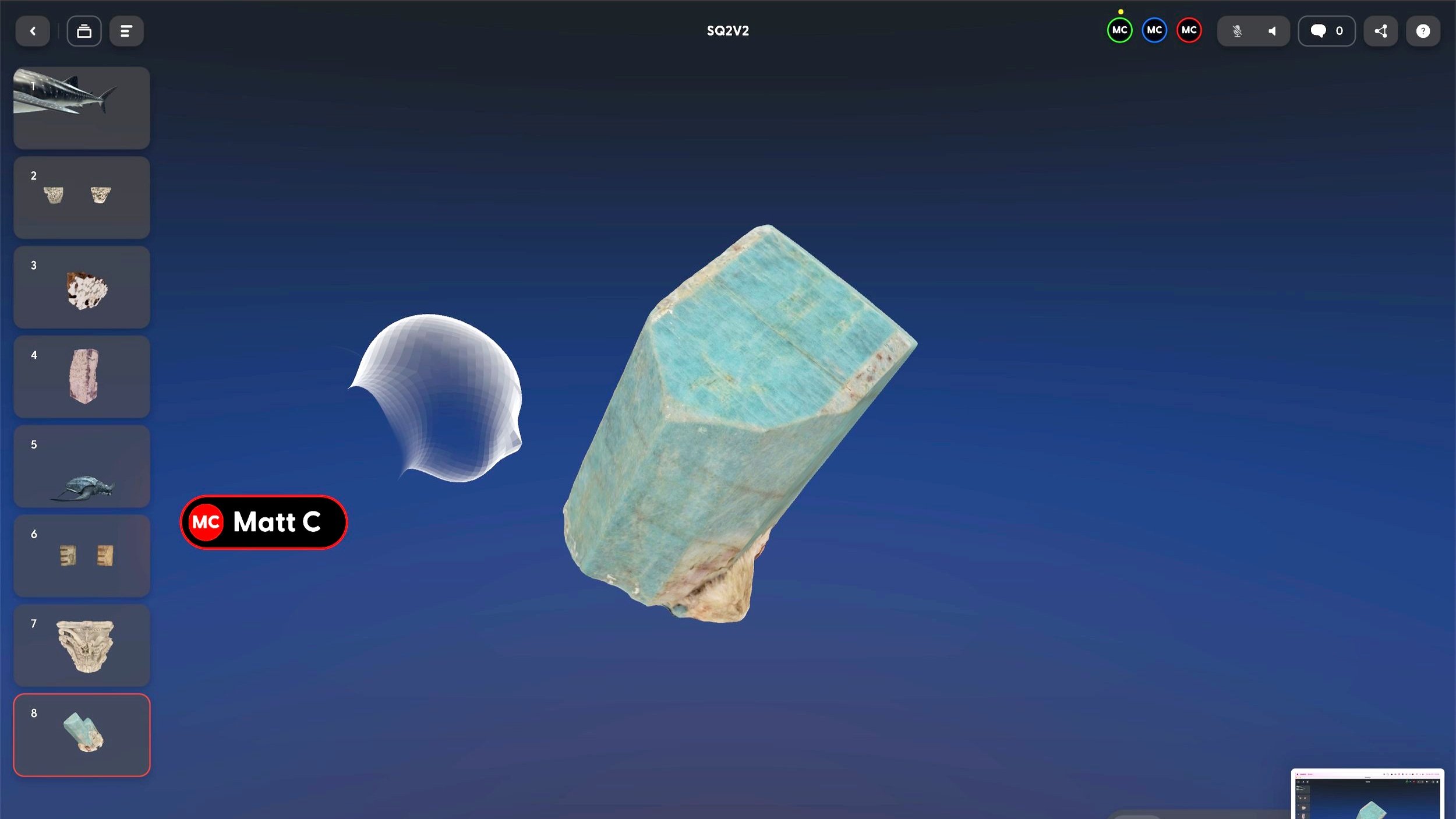

Getting back to research question #4 (RQ4), we have a viable protocol and promising early participant data comparing the performance of immersive and “flat” (traditional display-based) viewing experiences for future 3D quality control workflows. The experiment we’ve developed to gather this data begins with this prompt:

Today you will be conducting quality control on 3D models. Momentarily, you will be prompted with a fictional scenario and asked to respond to a question. It is important that you limit your verbal response to "yes" or "no" only. Do you understand? We will now begin with a practice scenario.

From there, participants are presented with a series of scenes and scenarios, each of which represents quality issues commonly encountered by 3D practitioners. Questions of mislabeling, feature identification, data loss, and resolution are all posed, and a combination of self-reported (cognitive load and usability) and performance (time and accuracy of task completion) data-gathering methods are deployed.
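The post doesn't describe how the RQ4 data are analyzed, so the following is only a hypothetical C++ sketch of the performance half of the protocol: each yes/no trial is tagged with its viewing condition, and accuracy and mean completion time are summarized per condition. The `Trial` fields, the `summarize` name, and the condition labels "immersive" and "flat" are illustrative assumptions; the self-reported measures (cognitive load, usability) are left out.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical record for one quality-control trial; field names are
// illustrative, not taken from the 3DFrame protocol.
struct Trial {
    std::string condition;  // viewing condition, e.g. "immersive" or "flat"
    bool answeredYes;       // participant's spoken yes/no response
    bool correctIsYes;      // the answer the scenario was designed to elicit
    double seconds;         // time to task completion
};

// Performance measures for one viewing condition.
struct Summary {
    int trials = 0;
    double accuracy = 0.0;     // proportion of correct yes/no responses
    double meanSeconds = 0.0;  // mean time to task completion
};

Summary summarize(const std::vector<Trial>& trials, const std::string& condition) {
    Summary s;
    int correct = 0;
    double totalSeconds = 0.0;
    for (const Trial& t : trials) {
        if (t.condition != condition) continue;  // skip other conditions
        ++s.trials;
        if (t.answeredYes == t.correctIsYes) ++correct;
        totalSeconds += t.seconds;
    }
    if (s.trials > 0) {  // avoid dividing by zero for an empty condition
        s.accuracy = static_cast<double>(correct) / s.trials;
        s.meanSeconds = totalSeconds / s.trials;
    }
    return s;
}
```

Comparing `summarize(trials, "immersive")` with `summarize(trials, "flat")` gives side-by-side accuracy and timing figures of the kind the protocol collects; a real comparison would still need appropriate statistics on top.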

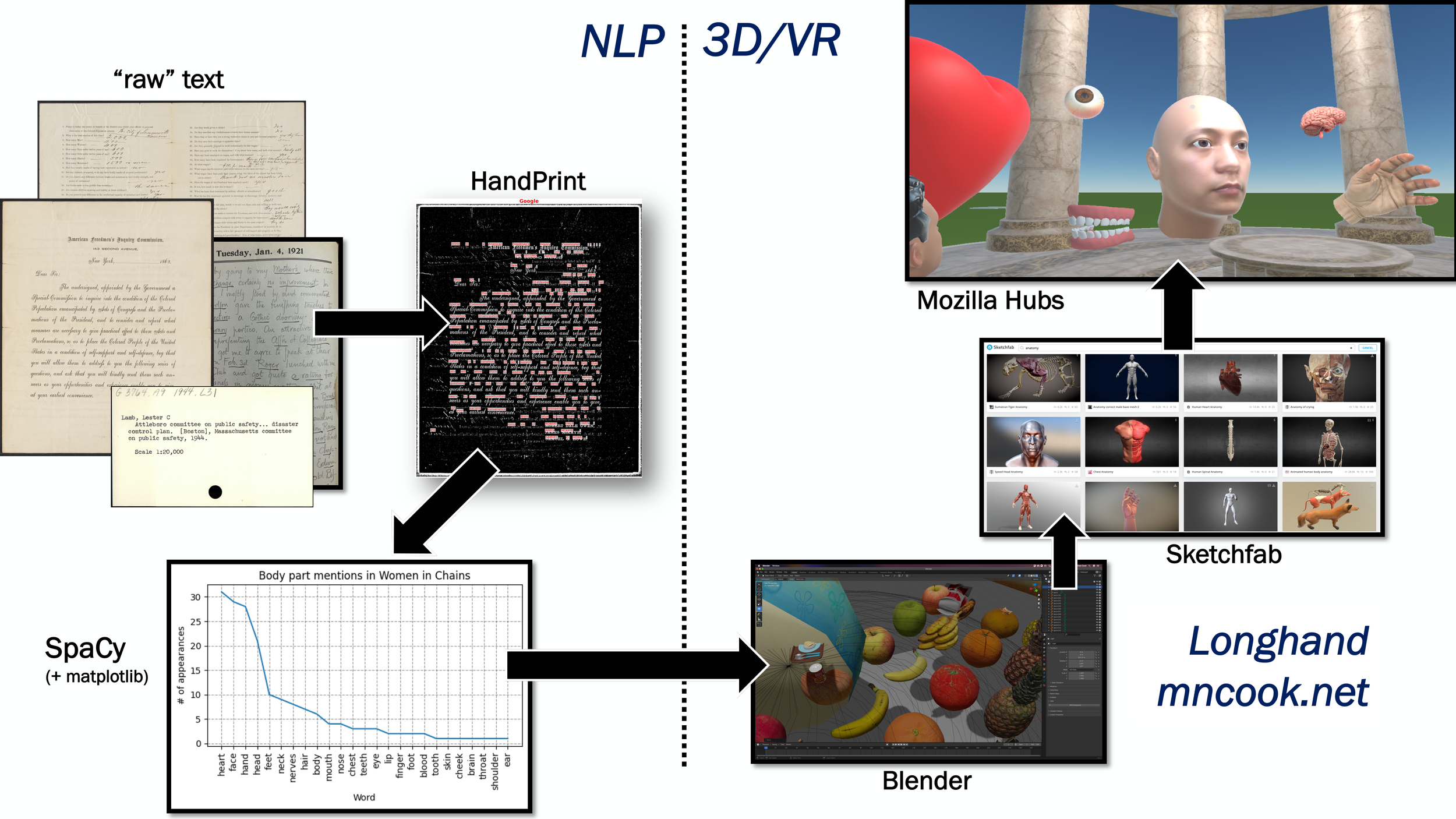

Longhand

Computational workflows can generate machine-actionable data from “raw” (e.g., handwritten) textual source material, allowing researchers to search vast collections. But while keyword search is a useful way to collate evidence and confirm hypotheses, it assumes the researcher already has some idea of where to begin, that is, some existing research questions. Keyword search results don’t reveal the nature of a corpus as a whole, though, nor do they represent the relationships between tokens whose source material might span media, time, or location.

So, how might one glimpse the contents of a text corpus to generate preliminary research questions that might inform downstream search and more sophisticated analyses related to topics, sentiments, parts of speech, or named entities? Visualizations (charts, graphs, diagrams, word clouds, etc.) are helpful at this exploratory research stage, when a researcher is simply trying to grasp the contents of a text corpus. This is where Longhand comes in.

Longhand is a word cloud generator, but the “words” are 3D models projected in 360 degrees around the user. Longhand exists to help researchers explore unwieldy text corpora (including in virtual reality) earlier in the research lifecycle. In addition to exposing text-centric researchers to the historically STEM-oriented benefits of virtual reality (e.g., depth cues, body tracking), Longhand leverages our “…ability to rapidly report object identity or category after just a single brief glimpse of visual input” (DiCarlo & Cox, 2007).
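Longhand's actual implementation lives in its GitHub repo and isn't reproduced here. As a rough, hypothetical sketch of the idea, the C++ below counts whitespace-separated tokens, keeps the most frequent ones, and spaces them evenly through 360 degrees on a ring around a viewer at the origin, scaling each word's 3D model by its frequency. The single-ring layout, the `layoutRing` name, and the linear size scaling are all assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <map>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// One placed "word": its label, how often it occurs, and where its 3D model
// sits on a ring around a viewer standing at the origin (y is up).
struct PlacedWord {
    std::string word;
    int count;
    double x, z;   // horizontal position on the ring
    double scale;  // model size, proportional to frequency
};

// Count whitespace-separated tokens, keep the topN most frequent, and space
// them evenly through 360 degrees at `radius` from the viewer.
std::vector<PlacedWord> layoutRing(const std::string& text, std::size_t topN,
                                   double radius, double maxScale) {
    std::map<std::string, int> counts;
    std::istringstream in(text);
    for (std::string token; in >> token;) ++counts[token];

    std::vector<std::pair<std::string, int>> ranked(counts.begin(), counts.end());
    // Most frequent first; ties broken alphabetically so the layout is stable.
    std::sort(ranked.begin(), ranked.end(), [](const auto& a, const auto& b) {
        return a.second != b.second ? a.second > b.second : a.first < b.first;
    });
    if (ranked.size() > topN) ranked.resize(topN);

    const double pi = std::acos(-1.0);
    std::vector<PlacedWord> placed;
    for (std::size_t i = 0; i < ranked.size(); ++i) {
        double angle = 2.0 * pi * i / ranked.size();  // even angular spacing
        double scale = maxScale * ranked[i].second / ranked.front().second;
        placed.push_back({ranked[i].first, ranked[i].second,
                          radius * std::cos(angle), radius * std::sin(angle), scale});
    }
    return placed;
}
```

A real corpus would need better tokenization (punctuation, case, stop words), and a 3D engine would read the x/z positions and `scale` values to instantiate each word's model.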

Check out some preliminary test results below, and see the GitHub repo for more.

Widener 360

Isometric perspective, with expanded annotation (featuring historical imagery), on the Matterport-hosted Widener Library 3D scan.

Given the increasing size and complexity of research data generally, and the recent advancement of scanning and visualization methods specifically (e.g. photogrammetry and virtual reality), 3D data has the potential to become the asset “of record,” or primary source material, for researchers in a wide range of academic disciplines. Moreover, this content can be produced for objects of study at various scales, including large-scale facilities, like Harvard’s very own Widener Library.

Among other applications, digitized library facilities can host virtual visits for non-affiliates, who would typically not be allowed inside private libraries like Widener. This “virtual tours” scenario was our initial motivation for the Widener 360 project, which relied on local experts at Archimedes Digital - and the increasingly popular Matterport scanning/hosting platform - to generate interactive 360 views for some (but not all) of our most iconic interior spaces.

But as we began annotating the scan with historical imagery, links to Harvard Library materials, and information about the building's inspiring architectural history, we began to understand the linked data implications of these virtual facilities. With Widener 360, our stunning architecture functions as a sort of visual index for collections, services, and history. Given the scale of the facility, there’s plenty of virtual space within which to deploy multimedia content. Indeed, one can even hide content (i.e., Easter eggs), as we managed to do through a Spotify integration that allowed for in-browser playback of Professor Lepore’s new podcast, The Last Archive.

Spotify-hosted podcast “hidden” in the book stacks. The podcast can be played in the browser.

We’ve seen an encouraging number of visitors to the tour page in the few months since it went live, and I imagine other libraries are seeing similar uptake. These scans also represent an online media type that transcends the traditional wall of text, the “Brady Bunch” call experience (e.g., Zoom), and the YouTube rabbit holes, all of which we are now experiencing ad nauseam. 3D content like the Widener 360 tour offers a spatialized experience, a very familiar aspect of our offline lives.

Importantly, these content types also support stereoscopic VR viewing (by clicking the little headset icon on the lower right portion of the screen). Once headset hardware becomes more common (say, with the release of the Apple glasses) and XR web architecture is standardized (as per Mozilla), we will reach a point where remote visitors can engage with this content bodily as well. That is, users will be able to physically walk through virtual scans of spaces in the company of fellow students and instructors.

Decimating and cropping Widener scan data for use in shared online environments, like Mozilla’s Hubs platform.

Instructional chess

Using Oculus Medium's "Clay" tool to model the knight piece

Motivations

There are an estimated 600 million chess players worldwide and a diverse body of peer-reviewed literature speaks to the benefits of learning the game, especially for children. Indeed, some of the most compelling research involves young children (as young as 4), whose spatial concept awareness was strengthened after chess training.

At the beginning of the summer (2017), I set a personal goal: To sculpt something each week in VR then attempt a 3D print of that work. Basically, I wanted to test what would print and what wouldn't - to see where the freedom of sculpting in a virtual environment ran up against the reality of FDM printing.

Well, I'm a pretty hopeless visual artist, but the combination of that regularly scheduled activity and a simultaneous series of chess games with friends and family gave me an idea: an instructional chess set to help with early childhood chess instruction and engender its associated benefits (spatial skills).

Brainstorming instructional chess piece design in my pocket notebook.

VR Modeling

Complete blindness to the goings-on in your physical surroundings is both a strength and a weakness of virtual reality. First, the bad: complete eye coverage makes people uncomfortable, especially in public spaces, where a hand on your shoulder can't be predicted and is seldom appreciated. The benefits of complete immersion may well counterbalance this perceived vulnerability, however. Insofar as approachable game design software (e.g. Unity, Unreal, etc.) makes crafting unique VR experiences a single-person endeavour, scholars - instructors in particular - can leverage this real-world obliviousness to strip away distraction and present to the learner only that content deemed relevant. VR modeling software, like Oculus Medium, is a great example of mostly beneficial full immersion.

The human mind is beholden to the human body, and specific anatomical axes (of limb and head/foot orientation, for example) constrain not just our movement, but our thoughts as well. But what if we stripped away the visual cues associated with parallel physical constraints like gravity, or the horizon line, and were able to create a workspace in a vacuum, a deep-space studio? Now, imagine if all your making tools were within reach, simultaneously, regardless of their mechanical complexity. By customizing the sculpting environment and dedicating time to familiarizing yourself with the variety of tools instantly available, one can quite quickly inhabit a creative environment where the medium itself (virtual "clay", in this case), the environment within which that medium is modified, and the tools for modification are all divorced from the constraints of their physical counterparts.

This is the conceptual context within which I imported existing (and freely available) CAD chess models for reinvention within Oculus Medium. After diagramming some movement concepts in a paper notebook, I sat down to model each piece in virtual reality. I began with the knight, the crux of the instructional chess "problem", and moved on from there. After approximately 10 hours of in-headset design time, I had a prototype of an entire chess set. While that may sound like a relatively low number, this sort of engagement was only practically possible given the 10-Series NVIDIA GPU currently powering VR in my Alienware 15 work laptop. To hit framerate targets for comfortable, long-term VR, this latest-generation hardware is an absolute must. Indeed, the combination of 1070/1080-grade graphics processing hardware and software like Medium represents, to my mind, the first in what will be a suite of "productivity grade" VR applications. Next step: 3D printing this first design...

Early knight design demonstrating the flexibility of VR modeling.

Prepping and Printing

While it's a clear step toward a VR-based rapid prototyping solution, Medium isn't a full-fledged CAD solution. Rather, Medium is an artistic outlet that can be co-opted (so to speak) for downstream output that resembles products rather than sculpture. Straight-line design tools, real-world scaling, and associated measurement capabilities are all noticeably lacking, so some model cleanup outside the VR design environment is necessary prior to 3D printing. To level the piece bases and close any remaining "cracks" in the pieces, for example, I passed each through Autodesk's Meshmixer application. Fortunately, Meshmixer, as well as the CURA slicing program we use to generate G-code for our LulzBot printers, is freely available.

Next, it was time to 3D print the first physical instantiation of Instructional Chess. Printing an entire side (each side has to be printed in a different color) required approximately 34 grams of PLA filament and a CURA-estimated 300 minutes of print time. That's sixteen pieces: eight pawns and eight back-rank pieces. As of now, I've iterated about four times on the models that comprise a full, printed side of Instructional Chess.
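The print figures above reduce to simple per-piece and per-set averages. A small C++ sketch of that arithmetic, assuming the 34 g and 300 minutes divide evenly across the sixteen pieces (they won't exactly, since back-rank pieces are larger than pawns); the function names are illustrative:

```cpp
#include <cassert>

// Figures reported above for one printed side (sixteen pieces).
constexpr double kGramsPerSide = 34.0;
constexpr double kMinutesPerSide = 300.0;  // CURA estimate
constexpr int kPiecesPerSide = 16;

struct PrintEstimate {
    double grams;
    double minutes;
};

// Average cost per piece, assuming filament and time split evenly by piece.
PrintEstimate perPiece() {
    return {kGramsPerSide / kPiecesPerSide, kMinutesPerSide / kPiecesPerSide};
}

// Totals for a number of sides; a full set is two sides in two colors.
PrintEstimate forSides(int sides) {
    return {kGramsPerSide * sides, kMinutesPerSide * sides};
}
```

That works out to roughly 2.1 g and 19 minutes per piece on average, and about 68 g and 10 hours of printing for a complete two-color set.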

The first semi-successful print revealed a host of issues. Most noticeable was the disproportionate scaling between the traditional, centered reference pieces and the modeled directional cues, which printed much larger than they appeared in virtual reality. Indeed, it was exceedingly difficult to tell the bishop from the rook, for example, so it was "back to the (virtual) drawing board" for a relative re-scaling of these piece components. Another major, continuing issue is the knight, which has raised arches to represent the piece's jumping ability and a somewhat hooked head, both features that require support material. My next goal is to revisit the knight design in Oculus Medium and see if the newly developed "Move Tool" can be used to connect the knight's head to its body, a sort of natural support workaround. I believe the entire set can be printed without support material if the knight can be fixed.
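Why do the knight's arches and hooked head need support? Slicers flag faces that overhang too steeply, and a common FDM rule of thumb is that surfaces tilted more than about 45 degrees from vertical need support. The C++ below is a generic, hypothetical sketch of that test for a single triangle (z up, counter-clockwise winding so the cross product gives the outward normal); it is not CURA's actual support algorithm, and the 45-degree default is an assumption to tune per printer.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

Vec3 sub(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// True when triangle (a, b, c) faces downward more steeply than maxOverhangDeg,
// measured from vertical: 0 = vertical wall, 90 = flat downward-facing ceiling.
bool needsSupport(const Vec3& a, const Vec3& b, const Vec3& c,
                  double maxOverhangDeg = 45.0) {
    Vec3 n = cross(sub(b, a), sub(c, a));  // outward normal (CCW winding)
    double len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
    if (len == 0.0) return false;          // degenerate (zero-area) triangle
    double nz = n.z / len;                 // z component of the unit normal
    if (nz >= 0.0) return false;           // faces up or sideways: self-supporting
    const double pi = std::acos(-1.0);
    double overhangDeg = std::asin(std::min(1.0, -nz)) * 180.0 / pi;
    return overhangDeg > maxOverhangDeg;
}
```

Running every triangle of the knight mesh through `needsSupport` would highlight the undersides of the arches and the hooked head; the proposed Move Tool workaround would aim to eliminate those flagged faces by giving them something to rest on.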

Early instructional chess prototype printing on LulzBot Mini

What's Next?

Print out a set for yourself! The complete Instructional Chess 3D model set is downloadable from Sketchfab (for free), and I'll be posting 3D printing instructions shortly, to ensure your set prints cleanly and efficiently. Importantly, libraries - of all sorts - offer 3D printing services, which you can use to create your own Instructional Chess set. Just consult this handy map, load the Sketchfab model files onto a flash drive, and you are ready to start teaching/learning the game. Looking forward to hearing your feedback and iterating on this design.

The Sparq labyrinth is an interactive meditation tool. With a touch-screen interface, the Sparq user selects from a variety of culturally significant labyrinth patterns and then engages with the projected pattern (e.g., walks it, performs yoga on it, or even dances along it) to attain a refreshing connection to the moment. This five-minute mindfulness technique requires no training and has been linked to decreases in systolic blood pressure and increased quality of life, which makes the Sparq the perfect wellness solution for your stressful workplace.

How can we be sure? Because the Sparq has been deployed across the nation in a diverse range of settings. Indeed, everyone from academic researchers (and stressed-out students) at UMass Amherst, the University of Oklahoma, Concordia University, and Oklahoma State University, to Art Outside festival-goers, to Nebraskan wine tasters has experienced the benefits of this interactive mindfulness tool.

The Sparq provides for a uniquely personal meditation experience. With touch-screen access to a variety of patterns - each representing a distinct cultural heritage - the Sparq users connect with history while reconnecting with themselves.

Unlike traditional labyrinth installations, the Sparq is mobile and (after the components have shipped) it can be set up in about an hour. This ease of installation, combined with the stunning beauty of the projected patterns, makes the Sparq a wellness solution suitable for nearly any workplace.


Ready for a Sparq? Contact me, and I'll share much more information about the thinking and motivation behind the Sparq, links to documented benefits, and instructions for setting up the system at your institution. Then you can experience for yourself the myriad benefits of the Sparq meditation labyrinth.

In Pima & Papago (Native American) cultures, the design below represents "Siuu-hu Ki" ("Elder Brother's House"). Legend has it that, after exploiting the village, the mythical Elder Brother would flee, following an especially devious path back to his mountain lair so as to make pursuit impossible. Elder Brother's House is one of several culturally significant labyrinth patterns that lend a powerful gravity to the overall Sparq experience.

"Hypnose" - Rapid Prototyping project

Bruton, Eric. Clocks & Watches. New York: Hamlyn Publishing Group, 1968.

OU Libraries' new makerspace/fab lab/incubator, Innovation @ the EDGE, is centered on the idea that demystifying emerging technology is critical to non-STEM engagement. Since my academic background is in the humanities (philosophy), a demonstration of rapid prototyping that takes inspiration from our large collection seemed important. Hence the Hypnose smell-clock: a mostly 3D-printed prototype incorporating microcontroller components and programming, inspired by the sorts of historical examples described in history-of-timekeeping texts found in the book stacks (as above).

Bronze Head of Hypnos from Civitella d'Arna

The original motivation for the Hypnose was simple: there are problems associated with waking up and checking one's smartphone to figure out if it is indeed time to wake up! Of course, alarms are a solution, although they aren't necessarily a pleasant way to start your day. Moreover, there are temptations (e.g., social media) associated with picking up your phone in the middle of the night. How to avoid the phone, then, and still get up for work on time? Why not train myself to wake up on time subconsciously, by associating different phases of my sleep cycle with distinct scents?

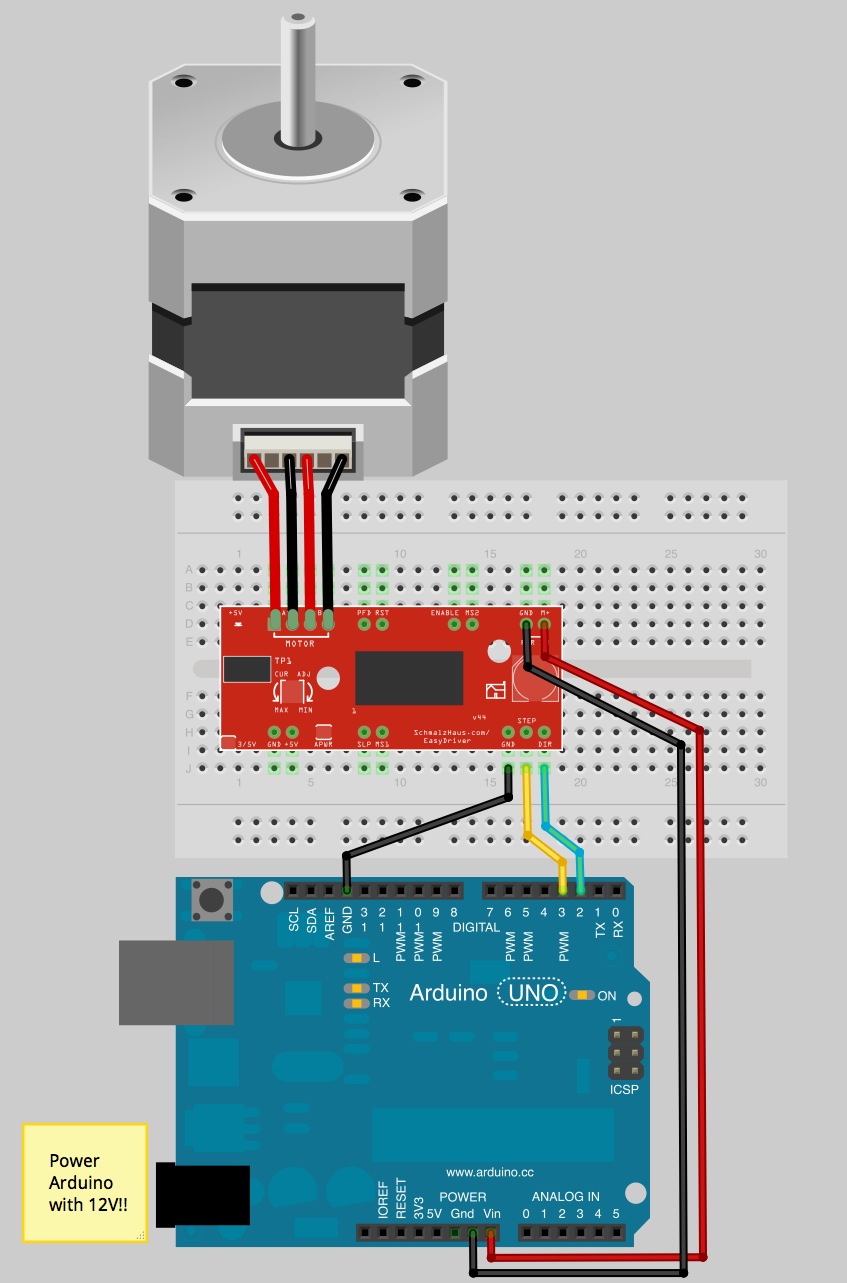

https://www.sparkfun.com/tutorials/400

This implementation used an Arduino Uno along with a SparkFun motor shield to power a stepper motor via a wall outlet. The precise rotational control provided by a stepper motor (as opposed to a torque-heavy servo) allows the code below to "jump" a measuring spoon, containing a small amount of scented wax melt, to a position directly above a heat lamp. This jump is programmed to occur every hour (3,600,000 milliseconds in Arduino code time); doubling that interval to two hours would cover an 8-hour sleep cycle, given four spoons. A certain wax melt, then, would always correspond to the final two hours before one wakes. I will undoubtedly come to dread that smell!
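The timing and step counts can be checked with a little arithmetic. The post doesn't specify the motor or microstepping mode, so this C++ sketch assumes a common 200-step (1.8 degree) motor driven at 1/8 microstepping (the EasyDriver's default; the Arduino code's comments mention an EasyDriver). Under those assumptions one revolution is 1,600 pulses, so the 400-pulse loop in the Arduino code advances the carousel a quarter turn, i.e., one of four spoon positions. The helper names are hypothetical.

```cpp
#include <cassert>

// Assumed hardware (not stated in the post): a 200-step (1.8 degree) motor
// at 1/8 microstepping, carrying four spoons spaced 90 degrees apart.
constexpr long kFullStepsPerRev = 200;
constexpr long kMicrostepsPerStep = 8;
constexpr long kSpoons = 4;

// Step pulses needed to advance the carousel by one spoon position.
constexpr long pulsesPerSpoon() {
    return kFullStepsPerRev * kMicrostepsPerStep / kSpoons;
}

// Delay between jumps so that the spoons evenly span `sleepHours`.
constexpr unsigned long intervalMs(unsigned long sleepHours) {
    return sleepHours * 3600000UL / kSpoons;
}

// Index (0-based) of the spoon over the heat lamp `elapsedMs` after start-up,
// assuming spoon 0 starts over the lamp and the carousel jumps at the end of
// each interval, as in the Arduino loop (delay first, then step).
long spoonOverLamp(unsigned long elapsedMs, unsigned long sleepHours) {
    return static_cast<long>((elapsedMs / intervalMs(sleepHours)) % kSpoons);
}
```

With `intervalMs(8)` (7,200,000 ms), the fourth spoon (index 3) sits over the lamp for the final two hours of an 8-hour night; the current one-hour interval corresponds to `intervalMs(4)`, a four-hour span.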

```cpp
const int dirpin = 2;   // Driver direction pin.
const int steppin = 3;  // Driver step pin.

// Time between spoon "jumps": one hour. Doubling it (7200000UL) spreads
// four spoons across an 8-hour night.
const unsigned long JUMP_INTERVAL_MS = 3600000UL;

void setup()
{
  pinMode(dirpin, OUTPUT);
  pinMode(steppin, OUTPUT);
}

void loop()
{
  digitalWrite(dirpin, LOW);  // Set the direction.
  delay(JUMP_INTERVAL_MS);    // Wait until the next spoon is due.

  for (int i = 0; i < 400; i++)  // Iterate for 400 microsteps (one spoon position).
  {
    digitalWrite(steppin, LOW);   // This LOW to HIGH change is what creates the
    digitalWrite(steppin, HIGH);  // "Rising Edge" so the EasyDriver knows when to step.
    delayMicroseconds(1000);      // This delay time is close to top speed for this
  }                               // particular motor. Any faster the motor stalls.
}
```

The assembly, originally modeled in SketchUp (above), takes its cue from a 1st-century bronze sculpture discovered in central Italy. According to Wikipedia, Hypnos's cave had no doors or gates, lest a creaky hinge wake him. It seems we both faced similar problems. Also, this ancient rendering of the Greek god of sleep conveniently lacks eyes: the eye sockets are actually holes in the sculpture. My thinking was that the scent could vent from those holes with the aid of a small computer fan, although the final prototype uses Hypnos as more of an aesthetic choice.

The Hypnose "face" - an amalgamation of a free, low-poly mask model found online and a set of wings, scaled and rotated - ultimately took close to 8 hours (and 3 tries) on our MakerBot printer, but the finished prototype works more or less perfectly. More importantly, OU Libraries now offers free training on all the tech associated with this project, so those once-intimidated humanities majors (like myself) can leverage the creativity they are known for, inspired perhaps by source material in our collection, to design and deploy their own creations.

We are in a second proof-of-concept stage for a mobile app that guides users through large indoor spaces while providing a plethora of location-based info and relevant push notifications (e.g., events, technology tutorials) along the way. The ongoing OU Libraries-based pilot program has paved the way for a campus-wide rollout of this cutting-edge technology. This tier-two launch coincides with the Galileo’s World exhibition, which debuted in August 2015. The tool now provides:

Integration of the online and offline University of Oklahoma user experience through real-time, turn-by-turn navigation.

Delivery of hyper-local content corresponding to the user's location with respect to campus resources, both indoors and out.

Powerful analytics capabilities, which allow for the analysis of space/service/technology usage throughout navigable areas.

Various associated utilities to assist disabled users as well as aid in emergency situations.

People tend to refer to the central routing feature as “indoor GPS”. It’s accurate to within about a meter, and it fulfills a goal we started focusing on early last year: simplifying an extraordinarily complex physical environment.

Bizzell, after all, is huge, and filled with services (some of which I’m barely familiar with myself). What we didn’t want, then, is something I've seen personally: a senior-level undergraduate proudly proclaiming that they are using our facilities for the first time.

Basically, our aim from the beginning was to end the intimidation that new students might feel when visiting the library for the first time, while also making our diverse services visible through an increasingly prevalent piece of pocket-sized hardware, the smartphone.

At the end of the 2015/16 academic year, which included the NavApp's first semester of (free) public availability, more than 2,000 unique users had downloaded and engaged with this innovative wayfinding tool. Our engagement was particularly encouraging, with back-end analytics indicating that individual users accessed more than 16 in-app screens on average.

Finally, the press has responded positively to the NavApp, and we've even received national awards for our work on this project. Please reach out to find out how to deploy your own wayfinding tool.

After months of R&D, OVAL 1.0 is ready for use. With this hardware/software platform, instructors and researchers alike can quickly populate a custom learning space with fully interactive 3D objects from any field. Then, they can share the analysis of those models across a network of virtual reality headsets - regardless of physical location or technical expertise. In this way, you are free to take your students or co-researchers into the "field" without leaving campus!

CHEM 4923, group RNA fly-through.

Not only are previously imperceptible, fragile, or distant objects (like molecules, museum artifacts, historical sites, etc.) readily accessible in this shared learning environment, but, using our public-facing file uploader, even the most novice users can easily drag and drop their 3D files into virtual space for collaborative research and instruction in virtual reality. Simply upload and sit down to begin.

Custom fabricated, library-designed VR workstation - courtesy of OU Physics dept.

Finally, natural interaction types, like leaning in to get a closer look at a detailed model, are preserved and augmented by body tracking technology. When these are coupled with intuitive hand-tracked controls (one less piece of software to learn!) and screenshot and video capture functions for output to downstream applications (e.g., publications and presentations), new perspectives can be achieved and captured to aid your scholarship.

"The impact on the students this week was immeasurable", says one OU faculty member who has already incorporated the OVAL into her coursework. How can we help you achieve the same impact? Please reach out for a personal consultation and let OU Libraries show you how this powerful tool, which is currently available for walk-in use in Innovation @ the EDGE, can support your educational goals.

3D Scanning - Experiments & Implications

My current professional focus on 3D visualization has led to experimentation with a host of scanning solutions. Basically, the goal is a more accurate digitization - an interactive snapshot with searchable/browsable depth.

The 3D assets below were generated using a Sony DSC-RX100 (for capturing high-definition, multi-angle stills of the specimens) and Autodesk Memento (for stitching those stills together into a surface mesh).

Please reach out, via the personal page, if you have a collection/antique/artifact/specimen that you would like to see preserved in this robust digital format.

The above prickly pear scan isn't perfect, but it's the only usable botanical scan that I've managed to generate after a half-dozen tries. Narrow-width connecting components (e.g. stems) in particular seem to disappear during Autodesk's cloud-based stitching process, which would explain why this opuntia came out while numerous capsicum scans did not. Lesson learned.

This statue of Omar Khayyam is located in the heart of OU's Norman campus. Fortunately, it was an overcast day when the scan was done; otherwise, direct sunlight would have reflected off the white stone. The statue is quite tall (about 8 ft.), however, so the imperfect top of this Persian polymath's cap was sliced off in post-production. Diffuse light and multi-angle access are necessary for a good scan.

As described on the spatial page, this Sheepherder's cabin represents a "field scan", whereby off-grid artifacts can be manipulated, analyzed, or otherwise investigated after the fact for details that onsite limitations (like time) simply won't allow for. Measurements, for example, can be made and recorded later, after the threat of rattlesnakes has long since passed.
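Photogrammetric models like the cabin scan are typically reconstructed at an arbitrary scale, so after-the-fact measurements need a real-world reference. The post doesn't say how the VR measurement tool is calibrated; as an assumption, this C++ sketch scales model-space distances using a reference feature of known length (for example, a scale bar or a tape-measured edge captured on site). The function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Point3 { double x, y, z; };

// Straight-line distance between two points in model units.
double modelDistance(const Point3& a, const Point3& b) {
    double dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Convert the model-space distance p-q to metres, using two points refA-refB
// on a reference feature whose real-world length is known.
double measureMetres(const Point3& p, const Point3& q,
                     const Point3& refA, const Point3& refB, double refLengthMetres) {
    double refModel = modelDistance(refA, refB);
    assert(refModel > 0.0);  // reference points must be distinct
    return modelDistance(p, q) * (refLengthMetres / refModel);
}
```

For instance, if two points 2 model units apart are known to be 1 m apart on site, a 5-unit span in the model measures 2.5 m.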

VR-based analysis of early 20th century sheepherder's ruins. Note the measurement tool.

Combining a few best practices gleaned from generating high-quality field scans like the sheepherder's cabin with the ability to effectively scan certain living, albeit static, organisms (plants, that is) means that 3D asset repositories of invasive flora, endangered orchids, or entire crops are feasible and perhaps inevitable.

Downstream analysis of these 3D assets can not only take place centrally - at the local institute of higher-ed, for example - but at the expert's leisure. Moreover, screen capture software means that new perspectives on distant/fragile/rare data-sets can be output for presentation and publication regardless of whether that perfect viewing angle was attained at the time of the scan.